
Battery Capacity(mAh): 3000mAh

Battery Included: Yes

Battery Type: Not Detachable

Built-in GPS: No

Camera Equipped: Yes

Dimensions: 222 x 174 x 157 mm

High-concern chemicals: None

IP68/IP69K: No

Language: Arabic, Polish, German, Russian, French, Korean, Norwegian, Portuguese, Japanese, Turkish, Spanish, Italian, English

Origin: Mainland China

Plug Type: USB Type-C

Power Source: Battery

State of Assembly: Ready-to-Go

Product Description

Dear customer, thank you for purchasing this product. This AI robotic pet has a soft, pet-like feel. It can connect online to a cloud-based multimodal AI model, hold intelligent conversations with you, and answer your questions. It can also perceive its environment, which lets it interact with you more intelligently and express its little emotions through movements, facial expressions, and sound effects. We hope it keeps you company and brings joy to your life.

Product Name: AI Pet Robot

Package Includes:

1 x AI Robotic Pet

1 x Product Instructions

1 x USB Charging Cable

Dimensions: 222 x 174 x 157 mm

Materials: ABS+PC / Nylon / PMMA

Speaker: 8 Ω / 3 W

WLAN: 802.11b/g/n

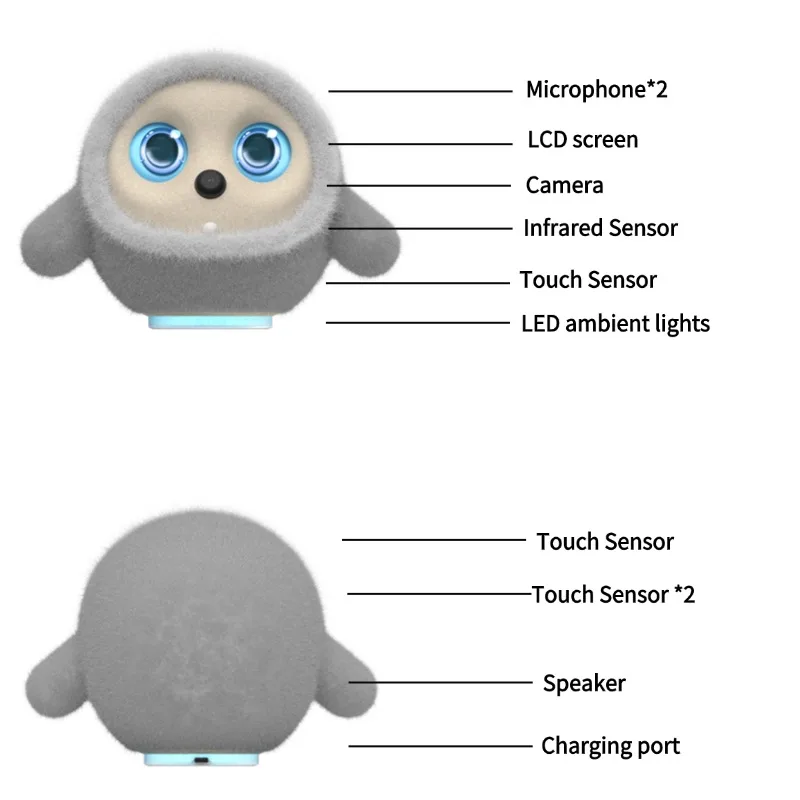

Microphones: 2

Touch Sensors: 4

Battery: 3000mAh Lithium-ion Battery / 7.4V

Charging: USB Type-C / 5 V DC, 2 A

Operating Environment: Temperature 10 °C to 40 °C; Humidity 0% to 85% RH

Microphone: Captures External Audio Information


LED Screen: Shows emotion animations and key system information

Camera: Captures external visual information

Pyroelectric Infrared Sensor: Low-power sensing of biological activity

Touch Sensors: Located on the belly, top, and back to detect the touch of a hand

LED Ambient Strip: Indicates System Status and Creates Atmosphere

Speaker: Plays Voice and Sound Effects

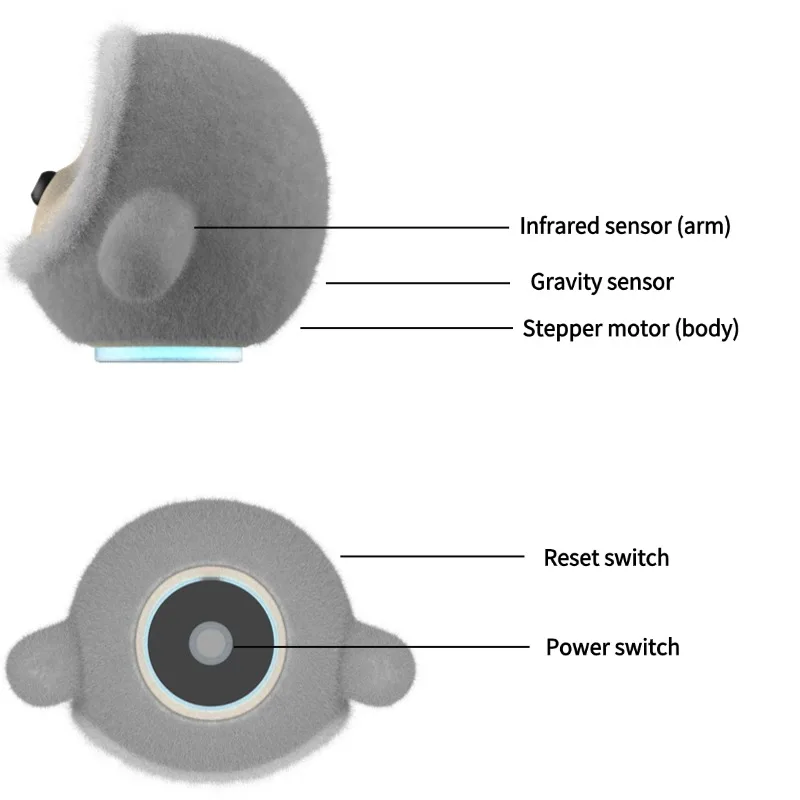

Stepper Motor: Drives Body and Hand Movements

Gravity Sensor: Detects the Device's Motion Status

USB Type-C: Charging Port

Power: Press and hold for 3 seconds to turn on or off; double-click to show the camera view and the hotspot name

Restart: Forces the system to restart

Outer Flannel Cover: Removable, washable, and replaceable

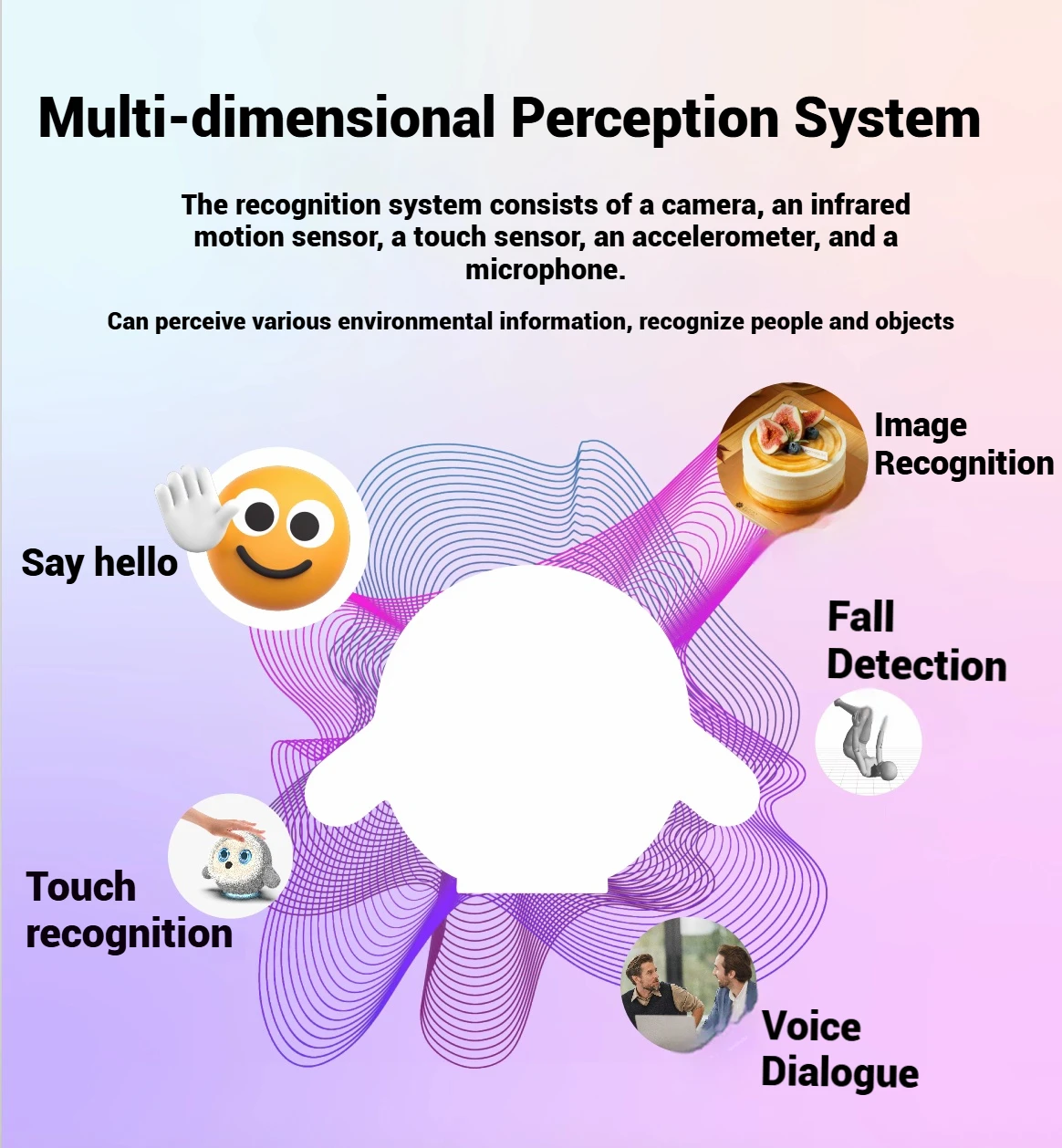

Core functions

Emotional expression:

The pet expresses emotions in response to the user's mood, speech, and behavior. Once the user responds, it switches into AI large-model chat mode.

Preset emotional expressions:

Emotional responses defined in the system settings are triggered by specific events. After the user replies, the pet switches into AI large-model chat mode. The preset emotional expressions are described in detail below.

Implementation: The pet expresses its emotions through the screen, the body and hand stepper motors, the full-color LED light strip, and speaker sound effects.

Preset emotion-sensing methods:

There are 7 sensing methods that can trigger the pet's emotional expressions, giving it a lifelike feel.

Touch sensing: Responds to touches on the head, both sides of the back, and the belly

Gravity sensing: Senses being dropped and being picked up

Voice sensing: Recognizes multiple voice commands

Object recognition: Identifies its surroundings and objects

Biometrics: Recognizes living beings and their behaviors

Scenario application:

Beyond being a cute companion pet, it can also serve as a practical "little employee":

Applied in stores: acts as a greeter and welcomes customers;

Applied to live streaming: acts as a co-host and boosts interactivity;

Applied at dinner parties: acts as a server and livens up the atmosphere;

Applied at school: acts as a little teacher and answers questions;

Applied to...

Multilingual interaction:

Designed for users around the world, it supports multilingual interaction and offers seamless communication and warm companionship.

1. Single-user interaction: automatically recognizes the user's language and replies in that language;

2. Single-user interaction: can reply in multiple languages;

3. Multi-user interaction: automatically recognizes each user's language and can act as a translator.

Personalized settings

Product Settings:

Personalize and manage the product:

(1) Dialogue mode (free mode, wake-up mode)

(2) Network mode (local mode, AI dialogue mode)

(3) Character switching (sharp-tongued, heroine, teacher, cute, chatterbox, funny...)

(4) Voice tone switching (girl, boy, young man, young woman, custom...)

(5) Language switching (Chinese, English, French, Russian, Arabic, Spanish, dialect...)

Situation perception:

Using touch sensors covering the head and back, microphones, a camera, and an infrared detection sensor, the pet senses its surroundings and interacts with you.

Scenario understanding:

Fuses voice input with visual input (focused still frames) and uses a text-image multimodal large model to generate dialogue.

The infrared detection sensor and microphones detect biological activity, allowing the pet to proactively interact with users.

Emotional expression:

Expresses emotions multimodally through the body and hand stepper motors, the LCD display (two eyes), and the speaker.

Placement and carrying:

On a desk, at the bedside, or held in your arms